When you start PostgreSQL`s instance (via pg_ctl start) the main process of the databases is started. In the past versions it was known as the postmaster process and now days it is called postgres. When this processes is started it allocates memory for two main things : shared memory and other background processes. The shared memory is a memory that the database uses mainly for cashing. Its main goal is to reduce the I/O operations and as a result of that improve the performance of the database. The other processes that the postgres process starts are responsible for the ongoing work of the database.

PostgreSQL`s architecture is based on a client/server communication. In the server side there is a server process that manages the files, database operations, connection and so on.. On the client side there is a tool/program which can be a graphical tool (gui) like pgAdmin or a command line tool(cli) like psql that is repnsible for connection the database and allowing the user to do some database operations. PostgreSQL supports multiple connections to the database. Each client that is trying to connect to the database is sending a request to the main postgres process. The postgres process allocated for each client a server process that handles the clients requests. Each server process is allocating an amount of memory from the ram that is called "Process local memory" and is used by

.the server process for his daily work

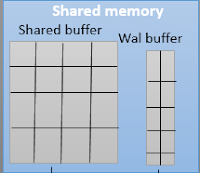

Shared memory

The shared memory consist of 2 main parts : shared buffer,wal buffer. The shared buffer contains data (pages) that is accessed very often(by one user or many..). You should ask your self - how can we benefit from saving that data in the memory ? Take a minute and think about it before you read the anwer. Well, if you need to get access to specific data very often it means that you access the datafiles in the file system and you generate I/O. Instead, we can store part of it or all of it (depends on the size..) in the memory. Reading from memory is much faster than reading from disk (lets assume its not ssd) and therefore we will have better performance. In addition, we reduce the amount of I/O on disks and that will have an impact on other operations that need to do big I/O.

The next one is the wal buffer. If you are familiar with oracle database then wals are corresponding to oracle`s redo logs. In general, wals are files that contains the changes that happens in the database. It is usefull in case we want to restore our database. In that case we can use the wals to "check" what changes were made and roll back the database. Before the changes written to the wal files they are written to the wal buffer. If we will write every change that happens immediatly to the wal files we will have alot of I/O operations on disk. Therefore, we write the changes to the wal buffer and in predetermined point in time it is flushed from the wal buffer to the disk.

work_mem & maintenance_work_mem

The work mem is a memory that is allocated for sort or related join operations. We can set its value in one of postgresql`s config files(postgresql.conf) that I will describe in the next post. This memory is per operation, which means that if we will have multiple queries on same time that involves joins or sorts each operation will have its own memory for itself and its size will be work_mem. The maintenance work mem is a memory that is allocated for maintenance operations like : building an index, alter table, vacuum. Like the work_mem parameter this parameter is also set in same config file(postgresql.conf).

Processes

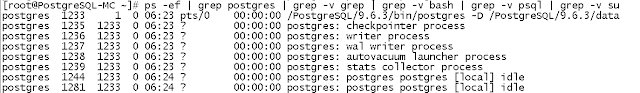

(All the processes that related to postgresql on my machine)

Now lets focus abit on the most important processes in our instance. I'm going to explain the basics on each process. Each process has also many config parameters in the config file of postgresql (postgresql.conf) which I wont mention in this post and I will cover in the next posts.

Lets start with the checkpointer. When a user perform changes to data that was loaded to the memory(shared buffer to be specific) that data is marked as "dirty". When I say data I mean blocks because PostgreSQL always writes and reads blocks . The checkpointer is responsible for writing those dirty blocks into the data files in the file system(step 1 in the diagram). After it flushes all the data to the disk the checkpointer process writes a special checkpoint record to the wals(step 2 in the diagram). This record guarantees that all the changes that happened before that this record was written to the wal are already in the data files. In other word, in case of a crash, the database can look on those checkpoint records and start applying only the changes after the last checkpoint record. Lets use the diagram as an example : Lets assume that after checkpoint #2 I did an insert and after it a delete. Those changes were flushed from the wal buffer to the wal files(commit). Suddenly after the delete the database crushed. When I started the database I knew that all the data before checkpoint 2# is already in the datafiles. However the insert and the delete that I did after the checkpoint werent in the datafiles. So in order to restore the database I just had to apply the next 2 rows in the wals that come right after the "checkpoint #2" record.

Lets move on to the next process - bg writer. The background writer also known as the writer process. The writer process is responsible for writing specific dirty blocks from the shared buffer to the disk based on a unique algorithem. This process purpse is not flushing dirty blocks into the disks but rather make sure that there are enough free buffers in the shared buffer. In addition, because the bg writer also flushes dirty blocks into the disk it reduces some of the work for the checkpointer.

In the section on the memry of the instance I explained what are the wal buffer and the wal files. The wal writer process is responsible for writing the changes that are written to the wal buffer into the wal files in case of a commit. Well to be honest, its not so accurate because its operation depends on many parameters but I just want you get the idea of its purpose. This process is crucial for our database because as I mentioned earlier the wal files contain all the changes that happened in the database.

The stats collector as you can guess is reponsible for gathering statistics about the postgres instance in our server. It collects statistics on tables (like num of rows,vacuum and analyze operations), indexex,functions and so on..

The last 4 parameters are wal reciever,wal sender,autovacuum and archiver. All those 4 parameters arent a must and they are optional. The wal reciever and wal sender are necessary in case we are using replication. They are responsible for sending(MASTER side) and reciving the wals(STANDBY side). The archiver is a process that generates archives from the wals. We can save those archives somewhere and in case we want to restore out database to a specific point in time we can use them.

In postgres when you delete records from a table those records are not deleted immediatly from the data files. Those records are just flaged as "deleted" but they are still exist. In other words, even after a delete operation on a table the disk space that those records occupied is not freed. The vacuum process marks the locations of those "deleted" records as a location that can be used to store new data that is entered into the table. When new data is entered into the table (insert) it will overwrite the "deleted" data and we can save space. In addtition,Vacuum also gather statistics on the table. The vacuum process can be run simultaneously only to dml queries! There is another mode of vacuum that is called vacuum full. Vacuum full has the same idea behind him but the differences are that it frees the space that was occupied by those deleted records and that disk space is given back to the operating system and is not exclusive only for that table. Moreover, vacuum full requires a full lock on the table and that is why it is not so recommended to use it in production environments. The autovauum process is reponsible for scheduling the vacuum operation based on threshold of dead rows and more interesting parameters that are available in postgresql.conf.

(An illustration of the vacuum process)

Thats all for today. In the next post I will talk about the physical structure(directories and files structuree) of the database, how to connect to the database with psql and pgadmin, understand some of the basics of sql. In addition I will explain about tablespaces,schemas and the important catalog and information_schema tables. If you think that I should add or edit something feel free to write it in the comments.

nice effort....expecting more in coming days....lets do create one telegram group aswell

ReplyDeleteThanks for the feedback

DeleteSure , Please It will be very helpful

DeleteThanks a lot

ReplyDeleteWelcome

DeleteExcellent !!! Please provide articles on Installation, backup/recovery, replication etc

ReplyDeleteYes , In coming Days I going to cover all topics of Postgresql

ReplyDelete